Recently I was working on getting data from a Excel spreadsheet into a Sql Server table. The excel sheet had the column names in the first row and the data set was medium in size in sense that it was about a few thousand rows. I built a SSIS package with a Data flow task which had a Excel Data Source and a OLE DB Destination for the Sql Server Database. I set up the data flow task with the mappings and did a test run of the SSIS package. During the execution there were data loading errors due to data type issues. On further research i found that the data type on excel spreadsheets were of UNICODE type and these were getting into non-unicode columns on the Sql Server table and this caused the error. In order to fix this issue I used the data conversionn task. The data conversion task is available in the data flow editor and usually sits after the source data connection. This means that the output of the source data is connected with the Data conversion task. Right click on the data conversion task and choose edit, this brings up a window which shows the list of columns in the data source. One check on the columns that need to be converted from to unicode to non-unicode. Here is a snapshot of the data conversion task editor: First Choose the input column, then rename the output alias if needed, then choose the non-unicode data type you want the source data to be converted to, repeat the steps for all the input columns that are selected and then click ok.

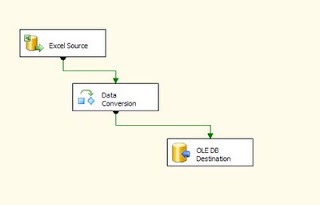

Once the Data conversion task editing is complete, connect it to the ole db destination. Here is an illustration of the data flow task with the Data Conversion task included.

Thursday, May 12, 2011

Tuesday, May 3, 2011

Splitting Queries...

I ran into a issue with a query which was running very slow. The query was returning only two columns but there was join between six to seven tables. When we had to move to a different server for development, the performance of the query went down even further. On examining the query, i found certain conditions in the where clause which were being repeated, also there were two conditions as part of the where clause which were linked by a OR condition like . One of the approaches i took was that I took the query with the seven table join and split it into two queries. I re-ran the query after the split and the performance greatly improved, it was taking 30 mins to execute when it was one big query. After the split i got the same results and execution time was 3 minutes. In a nutshell where there are lot of tables say more than 4 being joined, one needs to analyze the query and see if the results can be achieved in a faster manner in case the performance is bad.

Subscribe to:

Posts (Atom)