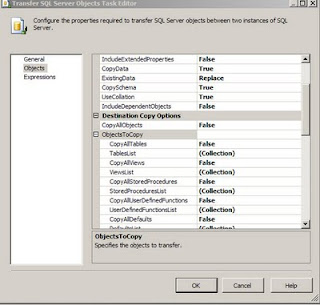

I was working on a project in which I had to convert DTS packages, which copied data from source tables into destination tables (both in SQL Server databases), into SSIS packages. One of the tasks used in the SSIS package was the Transfer SQL Server Objects task. In this task, certain tables had to be copied from the source SQL Server database to the destination database. In the Transfer SQL Server Objects task editor (opened by highlighting the task, right-clicking it and choosing the Edit... option), there is an option called TableList under the ObjectsToCopy section, which is under Destination Copy Options (part of the Objects section). Clicking the three dots shows the table list, where the tables that need to be copied can be checked.

If the number of tables is large and one wants to find out which tables have been chosen to be copied over, there is an easier way to look at the members of the table collection. Highlight the Transfer SQL Server Objects task, right-click it and choose Properties. In the list of properties, choose TableList under the Misc category and click the three dots. This opens the following window, which shows the tables that have been selected. For the purpose of confidentiality I have erased the table names in the graphic.

I wanted to highlight that the Properties window for this task is a quick way to view the collection of tables.

Wednesday, July 27, 2011

Thursday, July 21, 2011

High Availability...

Continuing from my post about the production server being down, I am evaluating possible high availability options, and here are some key points. There are a lot of discussions on MSDN, and there are blogs which explore this in more depth.

I have listed some points here.

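Both log shipping and mirroring keep two copies of the same database (log shipping can have many copies), but the secondary databases are read-only at best (a log shipping secondary is readable only when restored in standby mode, and a mirror only through a database snapshot), unlike the contingency databases, which can be modified.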

With log shipping, the transaction log backup interval determines the data-loss risk. For example, if we back up the transaction log every 5 minutes, up to 5 minutes of data might be lost.
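To make the arithmetic above concrete, here is a minimal sketch of the exposure for a given backup interval. The function name and the `rows_per_minute` write rate are hypothetical, made up for illustration. It also assumes the loss window equals the backup interval, ignoring copy and restore delays and the chance of recovering the tail of the log.

```python
from datetime import timedelta


def worst_case_data_loss(backup_interval, rows_per_minute):
    """Estimate the worst-case loss for a log shipping setup.

    Assumption: everything written since the last transaction log
    backup is lost, so the loss window equals the backup interval.
    rows_per_minute is a hypothetical average write rate.
    """
    if backup_interval <= timedelta(0):
        raise ValueError("backup_interval must be positive")
    minutes = backup_interval.total_seconds() / 60
    return backup_interval, minutes * rows_per_minute


# Example: log backups every 5 minutes, about 200 rows written per minute.
window, rows_at_risk = worst_case_data_loss(timedelta(minutes=5), 200)
print(f"Loss window: {window}, rows at risk: {rows_at_risk:.0f}")
```

Halving the interval halves the exposure, at the cost of more frequent backup, copy and restore jobs.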

Mirroring is the newer SQL Server technology, and it requires more hardware resources, especially network bandwidth. A mirror can be configured (in synchronous, high-safety mode) to ensure no data loss during a disaster, but performance will be somewhat lower.

A cluster provides high availability (least downtime) for a SQL Server instance, and with an active/active cluster one can also get better performance, but it requires Windows clustering. A cluster by itself is not a disaster recovery solution, since it has only one copy of each database. One can use a redundant disk array (RAID) to protect against disk failure.

One can also combine log shipping with a cluster to get both high availability and disaster recovery.

Log shipping and mirroring provide database-level high availability, so objects like logins, linked servers, jobs and SSIS packages have to be created manually on the secondary servers. Another factor to consider for log shipping and mirroring is the proximity of the failover server: if it is in a far-off location, there could be issues with how soon it can take over.

A cluster provides server-level high availability, so there is no need to worry about logins, linked servers, jobs or SSIS packages.
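The points above can be summarized as a small lookup. This is only a sketch of the comparison as described in this post. The class and function names are hypothetical, and the two attributes (scope and whether a second copy of the data exists) are a simplification, not a complete model of any of these features.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HaOption:
    name: str
    scope: str         # "database" or "instance" level protection
    second_copy: bool  # True if a separate copy of the data is kept


# The three options discussed in this post.
OPTIONS = [
    HaOption("log shipping", "database", True),
    HaOption("mirroring", "database", True),
    HaOption("cluster", "instance", False),
]

# Objects that live outside the user database.
SERVER_LEVEL_OBJECTS = ["logins", "linked servers", "jobs", "SSIS packages"]


def objects_to_recreate(option):
    """Server-level objects that must be created manually on the secondary."""
    if option.scope == "database":
        return list(SERVER_LEVEL_OBJECTS)
    return []


for opt in OPTIONS:
    manual = ", ".join(objects_to_recreate(opt)) or "none"
    print(f"{opt.name}: second copy={opt.second_copy}, manual objects: {manual}")
```

Reading it this way shows why the combination of a cluster with log shipping is attractive: the cluster covers the server-level objects, and log shipping supplies the second copy of the data.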

Wednesday, July 20, 2011

SQL Server Denali CTP3...

Microsoft has released the CTP3 version of SQL Server Denali. I have yet to get around to installing this version. Here are a couple of links talking about SQL Server Denali CTP3.

As per MSDN article:

SQL Server Code Name “Denali” is a cloud-ready information platform that will help organizations unlock breakthrough insights across the organization and quickly build solutions to extend data across on-premises and public cloud backed by mission critical confidence

http://www.microsoft.com/sqlserver/en/us/future-editions.aspx.

http://redmondmag.com/articles/2011/07/13/microsoft-releases-sql-server-denali-ctp3.aspx

Monday, July 11, 2011

Failover Database Server...

I ran into an issue last week in which the main production server went down in the morning and threw off all our key processing. We were dead in the water for 2-3 hours, and then the contingency server had to be used, but the data on that server was not current. The database had to be restored on the contingency server, and by the time it was up and running with the latest production data, one full day had been lost and the processing and reports that had to be generated were not complete. Another set of tasks then had to be undertaken to complete the lost day's processing and bring all the data up to date.

When the experts were called to troubleshoot the production database server, some patches were applied first, and then there were attempts to reboot the server, which failed. On further analysis it was found that some faulty HBA cards had caused the communication between the server and the SAN to break down. I am still not sure exactly how the SAN link caused the server to go down.

There were lessons learnt from this server failure. One is to have a failover server which would take over if the primary goes down. I am working on putting together an environment where the contingency server is as close to production as possible in terms of data and setup. This server shutdown cost the line of business a lot of hours and also caused a lot of stress and conflict. I hope to avoid such a situation through more thoughtful planning and implementation.
